ATD Blog

Act on Metrics

Thu Feb 19 2015

You’ve got numbers from your training. Now what? If you find that your data are unclear, or don’t seem to guide your next steps, you’re not alone. Let’s take care of that.

Preparation Counts

First, let’s review how to set yourself up for success with metrics. Here are the key steps to prepare for action:

Set measurable goals for the course. For example, start from learning objectives and set goals for how much people should learn. (See “Track Success with Metrics.”)

Find the features of the course that impact the goals. For example, use A/B testing to measure how including videos, or using videos of a certain type, affects the course's results. (See “Find the Levers with Metrics.”)
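As an illustration only, here is a minimal Python sketch of how the results of such an A/B test might be compared. It assumes that learners were randomly assigned to one of two course variants and that each learner either passes or fails the course quiz. The function name `two_proportion_z_test` and the counts are hypothetical. A two-proportion z-test is one common choice for this comparison, not the only one.

```python
import math


def two_proportion_z_test(pass_a: int, n_a: int, pass_b: int, n_b: int) -> tuple[float, float]:
    """Compare the pass rates of two course variants (hypothetical helper).

    Returns (z, two_sided_p). Assumes random assignment of learners and
    samples large enough for the normal approximation to hold.
    """
    p_a = pass_a / n_a
    p_b = pass_b / n_b
    # Pooled pass rate under the null hypothesis that both variants perform equally
    pooled = (pass_a + pass_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, computed via math.erf
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical counts: variant A has no video, variant B adds one
z, p = two_proportion_z_test(pass_a=60, n_a=100, pass_b=75, n_b=100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

A small p-value suggests that the difference in pass rates is unlikely to be chance alone. With the small classes typical of training, however, the test will often be inconclusive even when a real difference exists.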

If you’ve done these things, you’ll be ready for your next steps. If you didn’t do these things, and you’ve got a pile of numbers to make sense of, you’ve signed up to be a data miner. Now, mining data is an honorable profession, and if you have a background in statistics and a large volume of data (say, thousands of students), you could give it a try.

However, with the limited size of datasets that we typically get from training, it’ll be hard to find patterns that are statistically significant. So, you’ll come out ahead if you design your system from the start around measurable goals and measurable features.
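To see why small datasets make this hard, consider the standard sample-size approximation for comparing two pass rates. The following is a minimal Python sketch. It assumes a two-sided test at a 5 percent significance level with 80 percent power. These are conventional defaults, not thresholds this post prescribes. The function name `learners_per_variant` is hypothetical.

```python
import math
from statistics import NormalDist


def learners_per_variant(p_a: float, p_b: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate learners needed in EACH variant (hypothetical helper) to
    detect a change in pass rate from p_a to p_b with a two-sided
    two-proportion test at significance level alpha and the given power."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile matching the desired power
    p_bar = (p_a + p_b) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_a * (1 - p_a) + p_b * (1 - p_b))
    ) ** 2
    return math.ceil(numerator / (p_a - p_b) ** 2)


# Hypothetical: a lever that raises the pass rate from 60% to 75%, or only to 65%
print(learners_per_variant(0.60, 0.75))
print(learners_per_variant(0.60, 0.65))
```

Under these assumptions, detecting a 15-point improvement takes roughly 150 learners per variant. Detecting a 5-point improvement takes nearly 1,500, which is more than many courses ever see.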

Course Focus vs. Portfolio Focus

Designing a course around measurable learning objectives may already be part of your design process. We are used to thinking about training in this way. And, this is work that can happen in isolation from other courses. For example, the learning objectives for sales training for Widget X probably have no relationship to the learning objectives for engineering training for Widget Y. This compartmentalization makes it relatively simple to set measurable goals for individual courses.

Acting on metrics, however, requires a portfolio view. Finding the courses that need work, and accumulating insights into which levers work best, is a long-term effort that spans many courses.

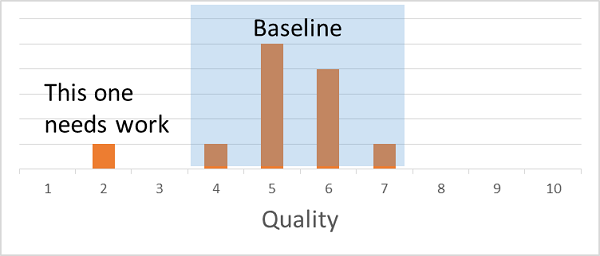

First, to find the courses that need work, we have to know what an underperforming course looks like. While we will have some external criteria for this, such as “X number of people need to get at least 80 percent of the quiz answers correct,” the best way to address this is to look across the portfolio and find the lowest-performing courses. In other words, establish a baseline, then find the low outliers that should be prioritized for improvement.
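Here is a minimal Python sketch of this baseline-then-outliers approach. It assumes that each course is summarized by a single pass rate, meaning the share of learners scoring at least 80 percent on the quiz. The course names and figures are hypothetical. It also treats a "low outlier" as a course more than one standard deviation below the portfolio mean. That cutoff is an illustrative assumption, not a rule from this post.

```python
from statistics import mean, stdev


def find_low_outliers(pass_rates: dict[str, float], threshold: float = 1.0) -> list[str]:
    """Return courses (hypothetical helper) whose pass rate falls more than
    `threshold` standard deviations below the portfolio mean, lowest first."""
    rates = list(pass_rates.values())
    baseline = mean(rates)   # portfolio-wide average pass rate
    spread = stdev(rates)    # sample standard deviation across courses
    cutoff = baseline - threshold * spread
    flagged = [course for course, rate in pass_rates.items() if rate < cutoff]
    return sorted(flagged, key=pass_rates.get)


# Hypothetical portfolio: share of learners scoring at least 80 percent on the quiz
portfolio = {
    "Sales: Widget X": 0.82,
    "Engineering: Widget Y": 0.78,
    "Onboarding": 0.85,
    "Compliance": 0.80,
    "Support: Widget Z": 0.55,
}
print(find_low_outliers(portfolio))
```

Because the baseline comes from the portfolio itself, the cutoff moves as your courses improve. This complements, rather than replaces, any fixed external criteria.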

Next, your experiments to find levers of quality (for example, through A/B testing) will be spread out across many courses. You won’t test every feature of every course, though. In fact, you might test only one or two features in each course to make the job manageable and the data easier to interpret. As you accumulate a “bag of tricks” through your testing, you can apply things you've learned from one course to the design and troubleshooting of other courses.

Put Best Practices to Work

Congratulations—you’ve set yourself up with some well-designed goals and levers. Now, your next steps are relatively easy.

Redesign an existing course: If your data show that a course is underperforming, and it can be adjusted by one or more of your levers, consider redesigning and republishing. This assumes that the course will continue to be delivered long enough to justify the investment. Checking your data early in the life of the course leaves the most time for a redesign to pay off. In fact, it’s best to plan for iteration with any course: publish, collect data, adjust, and publish again.

Approach future courses differently: Keep track of the best practices that you uncover through testing, and apply them in the planning stage for new courses. More importantly, once you’ve established best practices, you’re going to include them in all of your new courses. What if one of these new courses still underperforms? At that stage, you can have a discussion about the level of investment in the course. Even with knowledge of best practices, you’ll naturally make tradeoffs between what works best and what you can afford.

If the course is a priority for the business and it’s not working well, it may be time to add more high-end features, such as virtual instructors. And thanks to your work with metrics, you can show that it isn’t working, and you know what to suggest to improve it.

Share Best Practices

As your final action, share your findings with others. When you find what works best for your training, there’s a good chance it’ll benefit other designers. Of course, mileage for a particular design choice may vary: what you find works for engineers in your industry may not apply to another industry or to sales, for instance. So, also keep track of the demographics of your audience and include that information with your report.

In the long run, you could help lay the groundwork to make training a leading profession in the data-driven workplace of the 21st century.
