Talent Development Leader

Governance Stems AI Risks

Leaders whose companies are using AI understand its pitfalls more than those who aren’t using the technology.

Mon Nov 13 2023

Most corporate leaders have either started using artificial intelligence in their organizations or are considering doing so. But have they all considered putting ethical guidelines and policies in place for handling such technology?

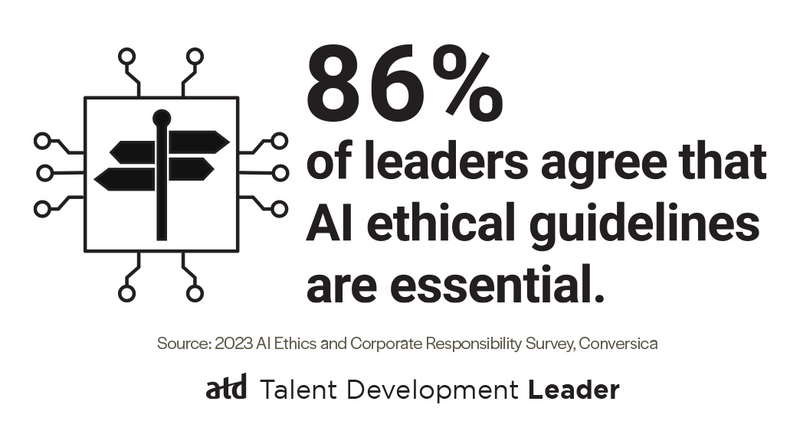

Conversica’s 2023 AI Ethics and Corporate Responsibility Survey polled 500 US-based business owners, C-suite members, and other senior leaders about AI ethics and corporate responsibility.

When asked about existing or planned AI use cases, leaders most often cited “external engagement,” such as customer service and support, followed by “insights,” such as data analytics, predictive modeling, and fraud detection. Among companies already using AI, however, “insights” was the top use case. Other use cases included internal engagement and process automation.

According to the survey, leaders’ greatest concerns about AI include the accuracy of data models, false information, and lack of transparency. However, the level of concern varied depending on whether respondents’ organizations use AI: leaders at companies already using AI were more concerned about potential lapses in the technology.

“For example, 21% \[of leaders at companies using AI\] (vs. 17% of the larger group of participants) cited concern over false information, and 20% (vs. 16%) were concerned about the accuracy of data models,” the report notes.

A similar gap appears in views on the need for strong ethical guidelines and governance around AI use. Among respondents from companies that have adopted AI, 86 percent agree that ethical guidelines are essential for the company and its leaders. That is 13 percentage points higher than among respondents as a whole (73 percent).

Leaders do understand the importance of guidelines and appropriate policies. Unfortunately, those surveyed say AI providers don’t offer resources on data security and transparency, and that they haven’t been able to find an AI provider whose ethical standards align with their companies’ own.

Going forward, companies should weigh the potential security risks, regulatory violations, brand damage, and other repercussions of lagging behind on AI best practices. Human oversight of AI can help stem these risks, as can automated governance and training.

With these risks in mind, TechTarget, which offers data-driven marketing services to business-to-business technology vendors and runs a network of technology-related sites that help IT and business managers solve problems, has curated a list of AI governance resources. The list includes the AI Now Institute; the Institute for Technology, Ethics and Culture (ITEC) Handbook; and ISO/IEC 23894:2023, a standard providing guidance on AI risk management.

Also cognizant of AI’s potential dangers and the need for policies, US President Joe Biden issued an executive order on AI for federal agencies last month. “Harnessing AI for good and realizing its myriad benefits requires mitigating its substantial risks. This endeavor demands a society-wide effort that includes government, the private sector, academia, and civil society,” Biden says.

