Talent Development Leader

Prove It: Just What the Doctor Ordered

How Boston Scientific executed a skills-based feedback process

Mon Feb 24 2025

Solution: Design and automate a new learner assessment and pipeline to promote skills-based learning.

Business impact highlight: Six months after implementing the assessment, the team observed a 42 percent reduction in the number of learners who were stalled in the training cycle.

The clinical education team within the urology division of Boston Scientific educates physicians on using products safely and effectively. As learning professionals, we strive to deliver world-class clinical education and training to healthcare professionals on a global scale through innovative and customized learning experiences.

Leadership identified a need to create thoughtful efficiency in the pipeline for training physicians to reach proficiency on a medical procedure involving one of the company’s products. Our objectives were to:

Design and complete an assessment for approximately 1,400 learners.

Create a method for tracking learners in the training pipeline.

Develop a way for new learners (20–30 expected per month) to enter the training process by establishing an automatic registration form.

Launch a new, skills-based training process.

Train the trainers and the business on the new process using an online playbook.

Automate as many of the above objectives as possible.

Resolution in motion

The team created a performance- and skills-based assessment that it could easily distribute and replicate. The assessment includes automated reporting and gives the learner immediate, consistent feedback so that the clinical education team can adjust training until participants reach proficiency.

The process entailed:

Defining the problem

Conducting a two-day planning or assessment intensive

Holding daily stand-up meetings

Rolling out the new process

Measuring metrics and observing changes

Setting up for success

First, the team established core agreements—which include commitments for attendance and review of materials; goal setting via weekly emails; and Friday check-ins to summarize developments, look ahead to next steps, and, importantly, celebrate progress—to ensure engagement and participation.

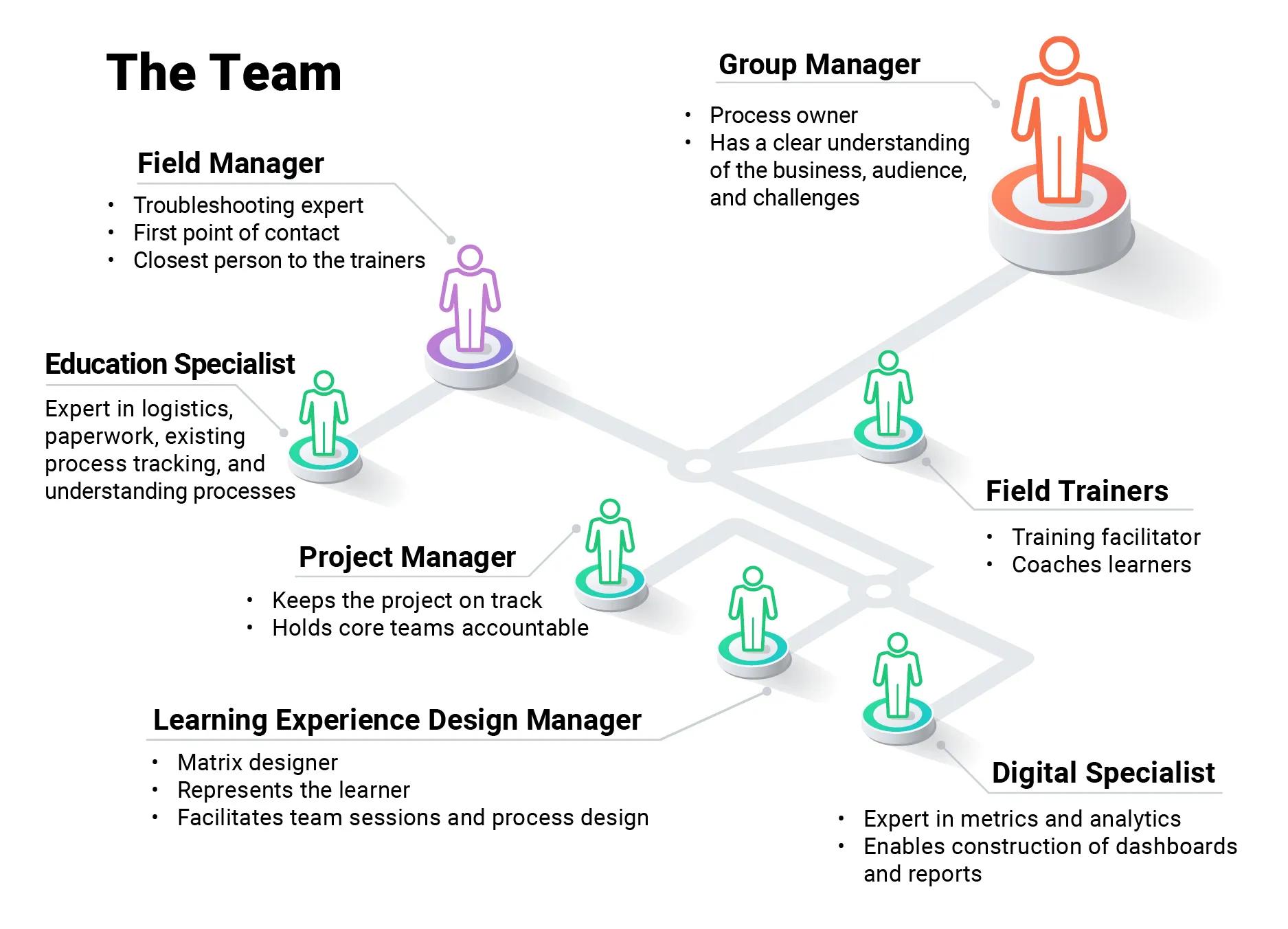

The team also determined who needed to be present for each part of the process. A project manager, an education specialist, a learning experience design manager, a digital specialist, two field managers, and a group manager formed the core project team.

Leadership tasked the team with developing a creative and measurable solution.

Adaptable and available

We adapted the automated, skills-based assessment from a preexisting company assessment that enabled sales representatives to train physicians.

The new assessment uses a set of criteria (essentially a rubric) against which L&D observes and evaluates learners to establish progress and improvement. The criteria account not only for whether the learner can complete the procedure, but also for how adeptly they can do so. For example, does the learner need reminders or assistance with a skill? Or do they complete it confidently and in a timely manner?

A trainer evaluates each skill as below expectation, satisfactory, or proficient against detailed criteria for each level. The project team's agreement on benchmarks for each component was a key achievement because it made measurement structured and repeatable. The process removes the potential for opinion-based standards and holds all learners to the same norms for proficiency. The evaluation is not just a check in a metaphorical box; it defines the degree of proficiency a learner has achieved for each skill.
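As a rough illustration only, a three-level rubric like the one described above could be represented in code as follows. This is a minimal sketch, not the team's actual tool: the skill names, the `SkillRating` type, the helper functions, and the rule that a learner is proficient only when every skill is rated proficient are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """The three rating levels named in the article."""
    BELOW_EXPECTATION = 1
    SATISFACTORY = 2
    PROFICIENT = 3


@dataclass
class SkillRating:
    skill: str    # hypothetical skill name
    level: Level  # rating assigned by the trainer


def skills_needing_training(ratings):
    """Return skills not yet rated proficient, lowest rating first."""
    pending = [r for r in ratings if r.level < Level.PROFICIENT]
    return [r.skill for r in sorted(pending, key=lambda r: r.level)]


def is_proficient(ratings):
    """Assumed rule: every assessed skill must be rated proficient."""
    return bool(ratings) and all(r.level == Level.PROFICIENT for r in ratings)


# Hypothetical assessment for one learner
ratings = [
    SkillRating("device setup", Level.PROFICIENT),
    SkillRating("device placement", Level.BELOW_EXPECTATION),
    SkillRating("post-procedure check", Level.SATISFACTORY),
]
print(skills_needing_training(ratings))
print(is_proficient(ratings))
```

Ordering the pending skills by rating mirrors the idea that trainers adjust training toward the weakest skills first; a real rubric would also carry the detailed criteria text for each level.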

The assessment uses an enterprise work and data management platform, which makes the form immediately available for the training team’s (or for other stakeholders’) review once an assessor completes it. We chose a technology solution that would collect, measure, and report data for the clinical education team, leaders, and potentially the learners themselves at each assessment point. Using the tool, trainers can take actions such as pivoting training approaches or sharing feedback with learners, and leaders can fine-tune team coaching and planning decisions.

We built an online playbook to direct trainers and the company as a whole through the process. The learner-driven playbook guides users on an as-needed or just-in-time basis.

Results and outcomes

The project was a success for several reasons. First, the planning and development process was faithful to principles of collaborative and engaged decision making, iterative and Agile approaches to design, and reliance on team expertise. Second, we took the time to clearly define the skills and the degree of each skill within the overall task. Finally, the assessment tool captures observable behaviors, and we automated data collection and reporting.

Due to the efficiency of the process and the consistency of feedback, learners progressed more quickly through the training pipeline. In fact, within six months, we observed a 42 percent reduction in the number of learners who were stalled in the training cycle, a result that we had expected to take up to a year to achieve.
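For readers who want to track a similar metric, the percent reduction in stalled learners is simple arithmetic. The sketch below assumes you have a count of stalled learners before and after the change; the counts used here are invented for illustration, because the article reports only the percentage.

```python
def percent_reduction(before, after):
    """Percent reduction from `before` to `after` (both are learner counts)."""
    if before <= 0:
        raise ValueError("before must be a positive count")
    return (before - after) * 100 / before


# Hypothetical counts, not figures from the project
stalled_at_launch = 200
stalled_after_six_months = 116
print(percent_reduction(stalled_at_launch, stalled_after_six_months))
```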

We implemented a data dashboard, which provided metrics around work scope, volume, and effort. The dashboard results equipped the education team with the data it needed to submit a request to leadership for additional team members. The data proved to leaders that, to sustain the success of the assessment approach, the team needed more members. Since then, the approach to performance- and skills-based assessments has expanded to other divisions at Boston Scientific.
